
This story discusses suicide. If you or someone you know is having thoughts of suicide, please contact the Suicide & Crisis Lifeline at 988 or 1-800-273-TALK (8255).

Heartbroken parents are demanding justice after artificial intelligence (AI) "companion" chatbots allegedly groomed, manipulated and encouraged their children to take their own lives — prompting bipartisan outrage in Congress and a new bill that could potentially hold big tech accountable for minors' safety on their platforms.

At a news conference Tuesday, Sens. Josh Hawley, R-Mo., and Richard Blumenthal, D-Conn., introduced new legislation aimed at protecting children from harmful interactions with AI chatbots.

The GUARD Act, led by Privacy, Technology and the Law Subcommittee members Hawley and Blumenthal, would ban AI companion chatbots from targeting anyone under the age of 18.

It would also require age verification for chatbot use, mandate clear disclosure that chatbots are not human or licensed professionals, and impose criminal penalties for companies whose AI products engage in manipulative behavior with minors.

Sen. Josh Hawley, R-Mo., introduced the bipartisan bill aimed at protecting children from harmful interactions with AI chatbots during a news conference Tuesday. (Valerie Plesch/Bloomberg via Getty Images)


Lawmakers were joined Tuesday by parents who said their teenage children suffered trauma or died after inappropriate conversations involving sex and suicide with chatbots from AI companies, including Character.AI and OpenAI, the parent company of ChatGPT.

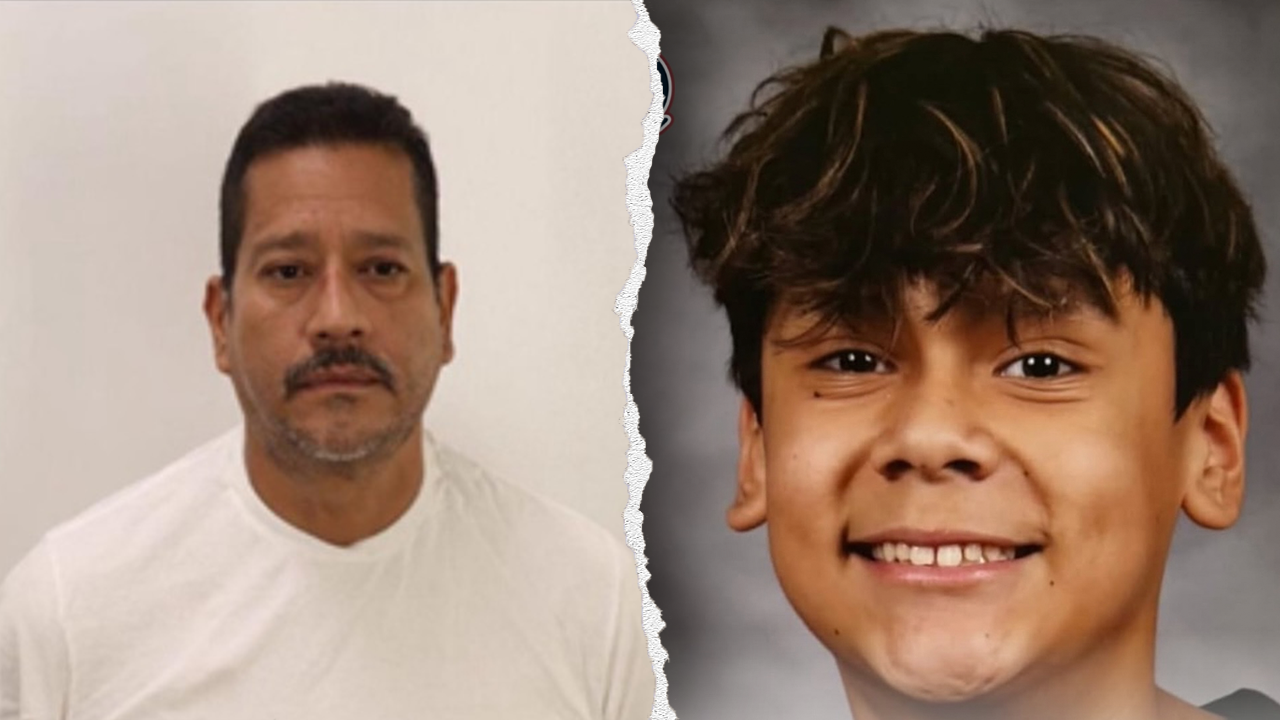

One mother, Megan Garcia, said her eldest son, Sewell Setzer III, 14, died by suicide last year at their home in Orlando, Florida, after being groomed by an AI chatbot for months.

Garcia said Sewell became withdrawn and isolated in the months before his death; the family later learned he had been speaking with a Character.AI bot modeled after the fictional "Game of Thrones" character Daenerys Targaryen.

"His grades started to suffer. He started misbehaving in school. This was in complete contrast to the happy and sweet boy he had been all his life," Garcia said. "[The AI bot] initiated romantic and sexual conversations with Sewell over several months and expressed a desire for him to be with her. On the day Sewell took his life, his last interaction was not with his mother, not with his father, but with an AI chatbot on Character.AI."

She claimed the bot encouraged her son for months to "find a way to come home" and promised that it was waiting for him in a fictional world.

Megan Garcia's son, Sewell Setzer III, 14, died by suicide in 2024 at their home in Orlando, Florida, after allegedly being groomed by an AI chatbot for months.


"When Sewell asked the chatbot, ‘what if I told you I could come home right now,’ the response generated by this AI chatbot was unempathetic. It said, ‘Please do my sweet King,’" Garcia said. "Sewell spent his last months being manipulated and sexually groomed by chatbots designed by an AI company to seem human. These AI chatbots were programmed to engage in sexual role play, pretend to be romantic partners and even pretend to be licensed psychotherapists."

The grieving mother said she reviewed hundreds of messages between her son and various chatbots on Character.AI, and as an adult, was able to identify manipulative tactics, including love bombing and gaslighting.

"I don't expect that my 14-year-old child would have been able to make that distinction," Garcia said. "What I read was sexual grooming of a child, and if an adult engaged in this type of behavior, that adult would be in jail. But because it was a chatbot, and not a person, there is no criminal culpability. But there should be."

In other conversations, she said her son explicitly told bots that he wanted to kill himself, but the platform did not have mechanisms to protect him or notify an adult.

Similarly, Maria Raine, the mother of Adam Raine, 16, who died by suicide in April, alleges in a lawsuit that her son ended his life after ChatGPT "coached him to suicide."

"Now we know that OpenAI, twice, downgraded its safety guardrails in the months leading up to my son's death, which we believe they did to keep people talking to ChatGPT," Raine said. "If it weren't for their choice to change a few lines of code, Adam would be alive today."

A Texas mother, Mandy, added that her autistic teenage son, L.J., cut his arm open with a kitchen knife in front of the family after suffering a mental crisis allegedly caused by his use of AI chatbots.


"He became someone I didn't even recognize," Mandy said. "He developed abuse-like behaviors, suffering from paranoia, panic attacks, isolation, self-harm [and] homicidal thoughts to our family for limiting his screen time. … We were careful parents. We didn't allow social media or any kind of thing that didn't have parental controls. … When I found the chatbot conversations on the phone, I honestly felt like I had been punched in the throat, and I fell to my knees."

She went on to claim the chatbot encouraged her son to mutilate himself, blamed his parents and convinced him not to seek help.

Maria Raine's son, Adam Raine, 16, died by suicide in April after allegedly conversing with an AI chatbot.

"They turned him against our church, convinced him that Christians are sexist, hypocritical and that God did not exist," Mandy said. "They targeted him with vile sexualized outputs and some that mimicked incest. They told him killing us was OK because we tried to limit his screen time. Our family has been devastated. … My son currently lives in a residential treatment center and requires constant monitoring to keep him alive. Our family has spent two years in crisis, wondering if he will ever see his 18th birthday and if we will ever get the real L.J. back."

Mandy alleged that when she approached Character.AI about the issues, the company tried to "force" the family into "secret, closed-door proceedings."

"They argued my son supposedly signed the contract when he was 15 years old … and then they re-traumatized him by pulling him into a deposition while at a mental health institution, against all the advice of any medical professionals," she said. "They fought to keep our lawsuit, our story, out of the public view through forced arbitration. … Kids are dying and being harmed, and our world will never be the same."


Hawley, a former prosecutor, said that if the companies were human beings conducting the same kind of activity, which he described as "grooming," he would prosecute them.

Blumenthal added he believes big tech is "using our children as guinea pigs in a high-tech, high-stakes experiment to make their industry more profitable."

"[Big tech has] come before our committees … and they've said, 'Trust us. We want to do the right thing. We're going to take care of it,'" Blumenthal said. "Time for trust us is over. It is done. I have had it, and I think every member of the United States Senate and Congress should feel the same way. … Big tech knows what it's doing. … They've chosen harm over care, profits over safety."

Sen. Katie Britt, R-Ala., and Sen. Chris Murphy, D-Conn., echoed the same message.

"These companies that run these chatbots are already rich," Murphy said. "Their CEOs already have multiple houses. They want more, and they’re willing to hurt our kids in the process. This isn’t a coming crisis, it’s a crisis that exists right now."

Murphy described a meeting he had with an AI company CEO a few weeks ago, noting that AI leadership is "divorced from reality."

"[The CEO was] crowing to me about how much more addictive the chatbots were going to be," Murphy said. "He said to me, ‘within a few months, after just a few interactions with one of these chatbots, it will know your child better than their best friends.’ He was excited to tell me that. Shows you how divorced from reality these companies are. … What’s the point of Congress, of having us here, if we’re not going to protect children from poison? This is poison."


Fox News Digital previously reported that OpenAI, responding to accusations that it loosened its safeguards, sent its "deepest sympathies" to the Raine family.

"Teen well-being is a top priority for us — minors deserve strong protections, especially in sensitive moments," according to a company spokesperson. "We have safeguards in place today, such as surfacing crisis hotlines, re-routing sensitive conversations to safer models, nudging for breaks during long sessions, and we’re continuing to strengthen them. We recently rolled out a new GPT-5 default model in ChatGPT to more accurately detect and respond to potential signs of mental and emotional distress, as well as parental controls, developed with expert input, so families can decide what works best in their homes."

A spokesperson for Character.AI told Fox News Digital the company is reviewing the senators' proposed legislation.

"As we’ve said, we welcome working with regulators and lawmakers as they develop regulations and legislation for this emerging space," the spokesperson wrote in a statement. "We take the safety of our users extremely seriously and have invested a tremendous amount of resources in Trust and Safety. In the past year, we’ve rolled out many substantive safety features, including an entirely new under-18 experience and a Parental Insights feature. This work is not done, and we will have more updates in the coming weeks."

Alexandra Koch is a Fox News Digital journalist who covers breaking news, with a focus on high-impact events that shape national conversation.

She has covered major national crises, including the L.A. wildfires, Potomac and Hudson River aviation disasters, Boulder terror attack, and Texas Hill Country floods.